By Fatos Fico*

We all know the story of spinach: a 19th-century scientist misplaced a decimal point and magically transformed spinach into a superfood with ten times its actual iron content. For decades, this “truth” shaped our diets around spinach and gave birth to the famous character Popeye the Sailor (known in Albania as Braccio di Ferro). The biggest irony? Even the story of the misplaced decimal point is a myth: one beautiful lie followed by another.

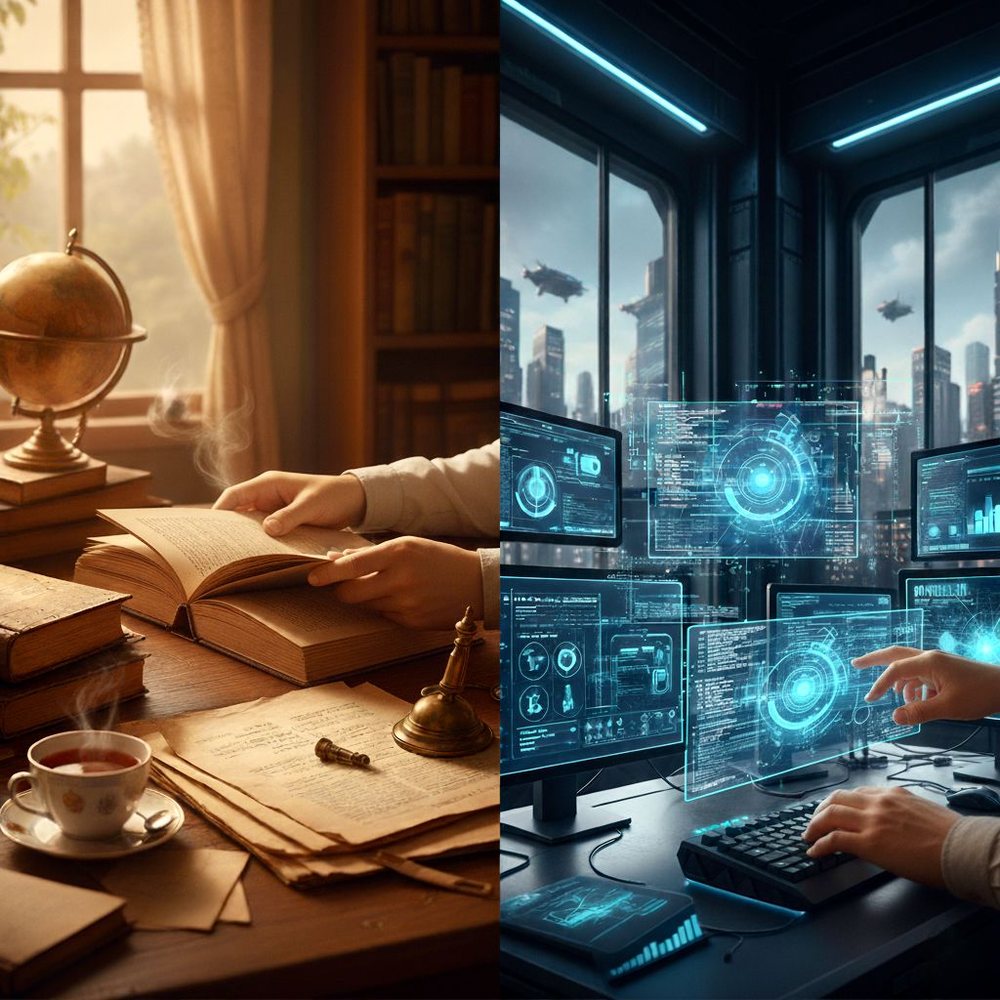

Today, as we use Artificial Intelligence (AI) more and more and stand on the threshold of AI-aided governance, this old myth is not just a historical anecdote. It is a warning. The promise of efficient decision-making or governance based on past data hides a pitfall: the danger of what we might call “Popeye Intelligence”. Popeye, the 20th-century American cartoon sailor, gained superhuman strength and supernatural confidence every time he consumed a can of spinach. Similarly, decision-makers and governments can gain a false sense of absolute strength and determination by relying on the beautifully packaged data served up by an AI system.

The process is simple and has four steps.

Step 1: The field of flawed “spinach”, existing data

Artificial Intelligence is not an oracle of truth; it is a mirror of the data it feeds on. Its food is the internet and existing databases: a gigantic, unorganized field full of “spinach” of all kinds. This “digital spinach” contains social prejudices, outdated statistics, rejected studies, fake news and countless modern-day “decimal-point errors”. In the Albanian context, where structured and verified data are even scarcer, the problem is even more acute.

Step 2: “The Spinach Box”, the AI-powered report

The job of an AI system is not to distinguish truth from lies, but to build answers and models. It takes all the raw, contradictory and often wrong information and processes it. It filters it, analyzes it and packages it into a clean form: a report, a graph, a policy brief. This final product is the “spinach box”. It is polished, sealed and appears completely objective. It neatly hides the poor quality of the raw material and presents its conclusions with a mathematical certainty that no human expert would dare to dispute.

Step 3: Creating and consuming “Popeye Intelligence”

This is where the real danger begins. A decision-maker, a businessman, or even a student, lured by the promise of quick and “scientific” decision-making, makes this “spinach box” his own. Faced with the complexity of the problem and the pressure to decide quickly, he sees the AI-packaged report as salvation: not just because it is a shortcut and a quick fix, but because it offers the perfect excuse to bypass the hardest part of decision-making, namely personal responsibility and managing uncertainty. The machine offers a definitive “yes” or “no,” freeing the decision-maker from the burden of doubt.

The moment a user accepts this product as an irrefutable fact, “Popeye Intelligence” is born: the strength and determination that a decision-maker draws from flawed data beautifully packaged by a machine. Armed with this artificial certainty, the decision-maker, the businessman or the student goes before the public, the shareholders or the class and announces a new action, a new solution, a new policy, not as a proposal, but as the only logical solution. He feels strong, visionary and infallible. He feels like Popeye (Braccio di Ferro).

Step 4: The consequences of the force of illusion

Imagine a decision-maker or businessman asking AI: “Where should we invest to increase income/well-being?” Based on online data, the AI might recommend building a luxury resort instead of a regional school, because tourism generates more digital “noise”. A decision-maker with “Popeye Intelligence” would proclaim this a data-driven strategic project, while in reality it ignores a vital social need. The decision seems strong, but it is unrealistic, because it is disconnected from human reality.

The biggest risk of integrating AI into decision-making, research, governance, etc. is not a Hollywood scenario where machines take over. It is a much more insidious trap, where we, the people, voluntarily surrender our critical judgment in the face of the lure of artificial certainty.

The challenge is not whether to use AI, but how. AI has proven to be an excellent tool for discovery in medicine and for optimizing energy networks, and it has extraordinary potential in many other areas. But the danger of “Popeye Intelligence” arises precisely when the tool becomes an oracle and analysis becomes an order. Will AI be used as a decision-making “black box” that is blindly trusted, or as a transparent tool that helps human experts analyze complexity and abundant data without hiding it?

Before we rush into a future run by algorithms, we should remember the simple lesson of a box of spinach: what appears to be a source of strength may very often be just a beautifully packaged myth. True leadership comes not from consuming processed data, but from the wisdom to know when to question the machine itself.

/monitor